#kubernetes api


Text

Ready to future-proof your applications and boost performance? Discover how PHP microservices can transform your development workflow! 💡

In this powerful guide, you'll learn:

✅ What PHP Microservices Architecture really means

✅ How to break a monolithic app into modular services

✅ Best tools for containerization like Docker & Kubernetes

✅ API Gateway strategies and service discovery techniques

✅ Tips on error handling, security, and performance optimization

With real-world examples and practical steps, this guide is perfect for developers and teams aiming for faster deployment, independent scaling, and simplified maintenance.

🎯 Whether you’re a solo developer or scaling a product, understanding microservices is the key to next-level architecture.

🌐 Brought to you by Orbitwebtech, Best Web Development Company in the USA, helping businesses build powerful and scalable web solutions.

📖 Start reading now and give your PHP projects a cutting-edge upgrade!


Text

What is Argo CD? And When Was Argo CD Established?

What Is Argo CD?

Argo CD is a declarative, GitOps continuous delivery tool for Kubernetes.

In DevOps, Argo CD is a Continuous Delivery (CD) tool that has become popular for delivering applications to Kubernetes. It is based on the GitOps deployment methodology.

When was Argo CD Established?

Argo CD was created at Intuit and made publicly available following Applatix’s 2018 acquisition by Intuit. The founding developers of Applatix, Hong Wang, Jesse Suen, and Alexander Matyushentsev, made the Argo project open-source in 2017.

Why Argo CD?

Application definitions, configurations, and environments should be declarative and version-controlled. Application deployment and lifecycle management should be automated, auditable, and easy to understand.

Getting Started

Quick Start

kubectl create namespace argocd

kubectl apply -n argocd -f https://raw.githubusercontent.com/argoproj/argo-cd/stable/manifests/install.yaml

Further user-oriented documentation is available for additional features. If you want to upgrade Argo CD, refer to the upgrade guide. Developer-oriented documentation is available for those interested in building third-party integrations.

How it works

Argo CD follows the GitOps pattern of using Git repositories as the source of truth for defining the desired application state. Kubernetes manifests can be specified in several ways:

Kustomize applications

Helm charts

Jsonnet files

Simple YAML/JSON manifest directory

Any custom configuration management tool that is set up as a plugin

Argo CD automates the deployment of the desired application states in the specified target environments. Application deployments can track updates to branches or tags, or be pinned to a specific version of the manifests at a Git commit.
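As a rough illustration of how a Git source, a revision, and a target environment fit together, the sketch below builds an Argo CD `Application` manifest as a Python dictionary and prints it as JSON. The field names follow the `argoproj.io/v1alpha1` Application resource; the application name, repository URL, and path are hypothetical placeholders, and the helper `build_application` is an assumption made for this example, not part of Argo CD.

```python
import json


def build_application(name, repo_url, path, target_revision, dest_namespace,
                      dest_server="https://kubernetes.default.svc",
                      automated=False):
    """Return an Argo CD Application manifest as a plain dict.

    target_revision may be a branch ("main"), a tag ("v1.2.0"),
    or a full commit SHA to pin the deployment to one commit.
    """
    app = {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Application",
        "metadata": {"name": name, "namespace": "argocd"},
        "spec": {
            "project": "default",
            "source": {
                "repoURL": repo_url,
                "path": path,
                "targetRevision": target_revision,
            },
            "destination": {"server": dest_server, "namespace": dest_namespace},
        },
    }
    if automated:
        # Opt in to automatic syncing, pruning, and self-healing.
        app["spec"]["syncPolicy"] = {"automated": {"prune": True, "selfHeal": True}}
    return app


# Hypothetical repository and path, tracking the "main" branch.
example = build_application(
    "guestbook", "https://example.com/org/repo.git", "guestbook", "main", "default",
    automated=True,
)
print(json.dumps(example, indent=2))
```

Passing a commit SHA as `target_revision` instead of a branch name would correspond to the pinned case described above.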

Architecture

Argo CD is implemented as a Kubernetes controller that continuously monitors running applications and compares their current, live state against the desired target state (as specified in the Git repository). A deployed application whose live state deviates from the target state is considered OutOfSync. Argo CD reports and visualizes the differences, and provides facilities to sync the live state back to the desired target state, either automatically or manually. Any changes made to the desired target state in the Git repository can be automatically applied and reflected in the specified target environments.
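The comparison between target state and live state can be pictured with a small sketch. This is not Argo CD's actual diffing logic, only a simplified model under stated assumptions: resources are plain dictionaries keyed by a hypothetical `(kind, name)` tuple, and any difference at all marks the application as out of sync.

```python
def diff_resources(desired, live):
    """Compare desired and live resources, both keyed by (kind, name)."""
    missing = sorted(k for k in desired if k not in live)   # in Git, not in cluster
    extra = sorted(k for k in live if k not in desired)     # in cluster, not in Git
    changed = sorted(k for k in desired if k in live and desired[k] != live[k])
    return {"missing": missing, "extra": extra, "changed": changed}


def sync_status(desired, live):
    """Return "Synced" when nothing differs, otherwise "OutOfSync"."""
    differences = diff_resources(desired, live)
    return "OutOfSync" if any(differences.values()) else "Synced"


desired_state = {
    ("Deployment", "web"): {"replicas": 3},
    ("Service", "web"): {"port": 80},
}
live_state = {
    ("Deployment", "web"): {"replicas": 2},
    ("ConfigMap", "old"): {},
}
print(sync_status(desired_state, live_state))
print(diff_resources(desired_state, live_state))
```

A sync operation, in this simplified picture, would create the missing resources, update the changed ones, and optionally prune the extra ones.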

Components

API Server

The API server is a gRPC/REST server that exposes the API consumed by the Web UI, CLI, and CI/CD systems. Its responsibilities include the following:

Application management and status reporting

Invoking application operations (such as sync, rollback, and user-defined actions)

Repository and cluster credential management (stored as Kubernetes secrets)

RBAC enforcement

Authentication, and auth delegation to outside identity providers

Git webhook event listener/forwarder

Repository Server

An internal service called the repository server keeps a local cache of the Git repository containing the application manifests. When given the following inputs, it is in charge of creating and returning the Kubernetes manifests:

URL of the repository

Revision (tag, branch, commit)

Path of the application

Template-specific configurations: helm values.yaml, parameters
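One way to picture the caching role of such a service is a cache keyed by exactly the four inputs listed above. The sketch below is an assumption-laden illustration, not the repository server's real implementation: `ManifestCache` and the `render` callback are hypothetical names, and a real system would presumably resolve a branch or tag to a commit before caching so that moving references do not return stale manifests.

```python
import hashlib
import json


class ManifestCache:
    """Cache generated manifests by (repo URL, revision, path, template config)."""

    def __init__(self, render):
        self._render = render      # callable that actually generates manifests
        self._cache = {}
        self.misses = 0

    @staticmethod
    def key(repo_url, revision, path, template_config):
        # Serialize the inputs deterministically, then hash them.
        payload = json.dumps([repo_url, revision, path, template_config], sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def get(self, repo_url, revision, path, template_config=None):
        config = template_config or {}
        cache_key = self.key(repo_url, revision, path, config)
        if cache_key not in self._cache:
            self.misses += 1
            self._cache[cache_key] = self._render(repo_url, revision, path, config)
        return self._cache[cache_key]
```

Changing any one input, for example a single Helm value, produces a different key and therefore a fresh render.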

Application Controller

The application controller is a Kubernetes controller that continuously monitors running applications and compares their current, live state against the desired target state as specified in the repository. When it detects an OutOfSync application state, it can optionally take corrective action. It is also responsible for invoking any user-defined hooks for lifecycle events (PreSync, Sync, and PostSync).
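The ordering of lifecycle hooks can be sketched as a simple phase loop. This is a simplified model, not Argo CD's code: `run_sync` and the hook functions are hypothetical, hooks here are plain Python callables rather than Kubernetes resources, and running Sync hooks just before the manifests are applied is a simplification of how that phase behaves.

```python
PHASES = ("PreSync", "Sync", "PostSync")


def run_sync(hooks, apply_manifests):
    """Run hooks phase by phase and apply manifests during the Sync phase.

    hooks maps a phase name to a list of callables. An exception raised by
    any hook propagates, so later phases do not run after a failure.
    """
    log = []
    for phase in PHASES:
        for hook in hooks.get(phase, []):
            hook()
            log.append(f"{phase}:{hook.__name__}")
        if phase == "Sync":
            apply_manifests()
            log.append("Sync:apply")
    return log


def migrate_database():
    print("running schema migration")


def smoke_test():
    print("running smoke test")


def apply_manifests():
    print("applying manifests")


print(run_sync({"PreSync": [migrate_database], "PostSync": [smoke_test]}, apply_manifests))
```

A database migration as a PreSync hook and a smoke test as a PostSync hook are typical uses of this ordering.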

Features

Applications are automatically deployed to designated target environments.

Multiple configuration management/templating tools (Kustomize, Helm, Jsonnet, and plain-YAML) are supported.

Ability to manage and deploy to multiple clusters

SSO integration (OIDC, OAuth2, LDAP, SAML 2.0, Microsoft, LinkedIn, GitHub, GitLab)

Multi-tenancy and RBAC policies for authorization

Rollback/roll-anywhere to any application configuration committed in the Git repository

Health status analysis of application resources

Automated configuration drift detection and visualization

Applications can be synced manually or automatically to their desired state.

Web UI that provides a real-time view of application activity

CLI for automation and CI integration

Webhook integration (GitHub, BitBucket, GitLab)

Access tokens for automation

PreSync, Sync, and PostSync hooks to support complex application rollouts (such as blue/green and canary upgrades)

Audit trails for application events and API calls

Prometheus metrics

Parameter overrides for overriding Helm parameters in Git

Read more on Govindhtech.com

#ArgoCD#CD#GitOps#API#Kubernetes#Git#Argoproject#News#Technews#Technology#Technologynews#Technologytrends#govindhtech


Text

"Building Microservices" by Sam Newman is a definitive guide to designing, developing, and deploying microservices-based architectures. The book provides a deep understanding of the principles, patterns, and practices required to build scalable, maintainable, and resilient microservices. Below is a user-friendly, step-by-step breakdown of the key outcomes and takeaways from the book, designed to help readers understand and apply microservices effectively.

#Microservices#SoftwareArchitecture#DevOps#CloudComputing#MicroservicesArchitecture#DistributedSystems#SoftwareDevelopment#APIs#MicroservicesDesign#TechTutorial#BackendDevelopment#DevOpsCulture#Containerization#Kubernetes#Docker#CloudNative#MicroservicesDevelopment#APIManagement#ScalableSystems#TechBooks#SoftwareEngineering#AgileDevelopment#CI/CD#Serverless#TechTrends#SoftwareDeployment


Text

A Comprehensive Guide to Building Microservices with Node.js

Introduction: The microservices architecture has become a popular approach for developing scalable and maintainable applications. Unlike monolithic architectures, where all components are tightly coupled, microservices allow you to break down an application into smaller, independent services that can be developed, deployed, and scaled independently. Node.js, with its asynchronous, event-driven…


Text

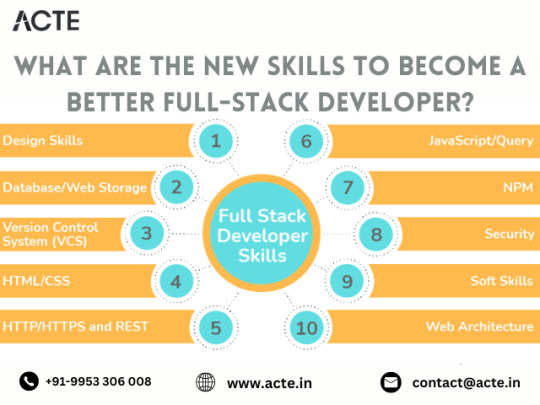

Unraveling the 5 Layers of Software Development

In the realm of software development services, every application is built upon a foundation of interconnected layers, each serving a specific purpose in delivering functionality to end-users. Understanding these layers and the technologies that power them is crucial for developers aiming to create robust and efficient software solutions. In this blog, we'll explore the five key layers of software architecture: User Interface (UI), Application Programming Interface (API), Database (DB), Business Logic, and Hosting, along with examples of technologies commonly used in each layer.

User Interface (UI): The UI layer is what users interact with directly. It encompasses everything from the visual design to the user experience (UX). Technologies used in this layer focus on creating intuitive, responsive, and aesthetically pleasing interfaces. Some popular UI Design technologies include:

HTML/CSS/JavaScript: These front-end technologies form the backbone of web-based UIs. HTML defines the structure, CSS styles the elements, and JavaScript adds interactivity.

React.js/Vue.js/Angular: These JavaScript frameworks are used to build dynamic and interactive user interfaces for web applications.

Swift/Kotlin: For mobile application development, languages like Swift (for iOS) and Kotlin (for Android) are used to develop native user interfaces.

Application Programming Interface (API): The API layer acts as an intermediary between the UI and the business logic, enabling communication and data exchange. APIs define the endpoints and protocols through which different software components interact. Common technologies used in API development services include:

RESTful APIs: Representational State Transfer (REST) is a popular architectural style for designing networked applications. RESTful APIs use HTTP methods like GET, POST, PUT, and DELETE to perform operations on resources.

GraphQL: An alternative to REST, GraphQL provides a more flexible and efficient approach to querying and manipulating data. It allows clients to request only the data they need, reducing over-fetching and under-fetching.

Express.js/Django/Rails: Frameworks like Express.js (for Node.js), Django (for Python), and Rails (for Ruby) are commonly used to build web APIs quickly and efficiently.
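To make the mapping between HTTP methods and operations on a resource concrete, here is a minimal, framework-free sketch. It does not use Express.js, Django, or Rails; `ResourceStore` is a hypothetical in-memory stand-in, and the status codes follow common REST conventions rather than any specific framework's defaults.

```python
class ResourceStore:
    """In-memory collection that maps HTTP methods to CRUD operations."""

    ALLOWED = {"GET", "POST", "PUT", "DELETE"}

    def __init__(self):
        self._items = {}
        self._next_id = 1

    def handle(self, method, item_id=None, body=None):
        """Return a (status_code, payload) tuple for one request."""
        if method not in self.ALLOWED:
            return 405, {"error": "method not allowed"}
        if method == "POST":                      # create
            new_id = self._next_id
            self._next_id += 1
            self._items[new_id] = dict(body or {})
            return 201, {**self._items[new_id], "id": new_id}
        if method == "GET" and item_id is None:   # list the collection
            return 200, [{**v, "id": i} for i, v in sorted(self._items.items())]
        if item_id not in self._items:
            return 404, {"error": "not found"}
        if method == "GET":                       # read one
            return 200, {**self._items[item_id], "id": item_id}
        if method == "PUT":                       # replace
            self._items[item_id] = dict(body or {})
            return 200, {**self._items[item_id], "id": item_id}
        del self._items[item_id]                  # DELETE
        return 204, None


store = ResourceStore()
print(store.handle("POST", body={"name": "first"}))
print(store.handle("GET", 1))
print(store.handle("DELETE", 1))
```

In a real API the same mapping is usually expressed as routes in the chosen framework, with the business logic layer behind each handler.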

Database (DB): The database layer is responsible for storing, retrieving, and managing data. It provides a persistent storage solution for an application's information. Various types of databases exist, including relational databases, NoSQL databases, and in-memory databases. Some popular database technologies include:

MySQL/PostgreSQL: Relational database management systems (RDBMS) like MySQL and PostgreSQL are widely used for structured data storage and management.

MongoDB: A popular NoSQL database, MongoDB is designed for storing unstructured or semi-structured data in JSON-like documents.

Redis: An in-memory data structure store, Redis is often used as a caching layer or for real-time data processing.
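The caching role mentioned for Redis is often implemented with the cache-aside pattern: read from the cache first, fall back to the database on a miss, then store the result with an expiry. The sketch below shows that pattern with a plain dictionary standing in for the cache, so it runs without a Redis server. `CacheAside` and `load_from_db` are hypothetical names introduced for this example.

```python
import time


class CacheAside:
    """Cache-aside reads with a time-to-live, using a dict as the cache."""

    def __init__(self, load_from_db, ttl_seconds=60.0, clock=time.monotonic):
        self._load = load_from_db   # slow lookup against the primary database
        self._ttl = ttl_seconds
        self._clock = clock
        self._cache = {}            # key -> (value, expires_at)

    def get(self, key):
        now = self._clock()
        entry = self._cache.get(key)
        if entry is not None and entry[1] > now:
            return entry[0]                       # cache hit
        value = self._load(key)                   # cache miss: go to the database
        self._cache[key] = (value, now + self._ttl)
        return value

    def invalidate(self, key):
        """Drop a key, for example after the underlying row is updated."""
        self._cache.pop(key, None)
```

With a real Redis client, the dictionary lookups would become network calls, but the control flow would stay the same.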

Business Logic: The business logic layer contains the application's core functionality and rules. It processes requests from the UI, interacts with the database, and performs the necessary operations to fulfill user actions. While business logic can be implemented in various programming languages, some technologies commonly used for this layer include:

Java/C#: Object-oriented languages like Java and C# are often chosen for building robust and scalable business logic components.

Node.js/Python: JavaScript (with Node.js) and Python are also popular choices, especially for applications requiring agility and rapid development.

Spring/.NET Core: Frameworks like Spring (for Java) and .NET Core (for C#) provide tools and libraries for building enterprise-grade business logic components.

Hosting: The hosting layer encompasses the infrastructure and environment where the application runs. It includes servers, cloud platforms, containers, and other deployment options. Popular hosting technologies and platforms include:

Amazon Web Services (AWS)/Microsoft Azure/Google Cloud Platform (GCP): These cloud service providers offer a range of hosting solutions, including virtual machines, containers, and serverless computing.

Docker/Kubernetes: Containerization technologies like Docker and orchestration platforms like Kubernetes provide efficient ways to package, deploy, and manage applications across different environments.

Heroku/Netlify/Vercel: These platforms offer simplified hosting solutions specifically tailored for web applications, providing features like continuous deployment, scalability, and managed infrastructure.

In conclusion, navigating the various layers of software architecture requires a comprehensive understanding of each layer's purpose and the technologies that power them. By leveraging the right technologies for UI, API, DB, logic, and hosting, developers can build robust, scalable, and maintainable software solutions that meet the needs of modern users and businesses.

#webdesign#mobileappdevelopment#appdevelopment#web developers#webdevelopment#youtube#apiintegration#thememakker#webdevelopmentcompany#hosting#database#serverless computing#api#uiuxdesign#ui#ux#aws#ror#docker#java#kubernetes#hire developers#webservices


Text

Keep Your Kubernetes Up to Date: Detect Deprecated APIs with Pluto

Are you worried that your Kubernetes cluster is using deprecated APIs that could cause security or compatibility problems in the future? This is where Pluto comes in. Pluto: Your guide to healthy APIs in Kubernetes. Pluto is a tool developed by Fairwinds that helps you identify and update deprecated Kubernetes API versions (apiVersions) in your code and…
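The idea behind this kind of check can be sketched in a few lines. The example below is not Pluto and does not reflect how Pluto is implemented; it is a minimal illustration that looks up each manifest's `apiVersion` and `kind` in a small, hand-written table of API versions removed in past Kubernetes releases. The function name `find_deprecated` and the sample manifests are hypothetical, and the table covers only a few well-known cases.

```python
# (apiVersion, kind) -> (replacement apiVersion, Kubernetes release that removed it)
DEPRECATED_APIS = {
    ("extensions/v1beta1", "Ingress"): ("networking.k8s.io/v1", "1.22"),
    ("apps/v1beta1", "Deployment"): ("apps/v1", "1.16"),
    ("batch/v1beta1", "CronJob"): ("batch/v1", "1.25"),
    ("policy/v1beta1", "PodDisruptionBudget"): ("policy/v1", "1.25"),
}


def find_deprecated(manifests):
    """Return one finding per manifest that uses a listed deprecated API."""
    findings = []
    for manifest in manifests:
        key = (manifest.get("apiVersion"), manifest.get("kind"))
        if key in DEPRECATED_APIS:
            replacement, removed_in = DEPRECATED_APIS[key]
            findings.append({
                "name": manifest.get("metadata", {}).get("name", "<unnamed>"),
                "kind": key[1],
                "apiVersion": key[0],
                "replacement": replacement,
                "removed_in": removed_in,
            })
    return findings


sample = [
    {"apiVersion": "extensions/v1beta1", "kind": "Ingress", "metadata": {"name": "web"}},
    {"apiVersion": "apps/v1", "kind": "Deployment", "metadata": {"name": "api"}},
]
for finding in find_deprecated(sample):
    print(finding)
```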


Text

Tips to Boost Release Confidence in Kubernetes

Software development takes a lot of focus and practice, and many newcomers find the thought of releasing a product into the world a bit daunting. All kinds of worries and fears can crop up before release, and even when unfounded, doubt can make it difficult to pull the trigger.

If you’re using a solution like Kubernetes software to develop and release your next project, below are some tips to boost your confidence and get your product released for the world to enjoy:

Work With a Mentor

Having a mentor on your side can be a big confidence booster when it comes to Kubernetes software. Mentors provide not only guidance and advice, but they can also boost your confidence by sharing stories of their own trials. Finding a mentor who specializes in Kubernetes is ideal if this is the container orchestration system you’re working with, but a mentor with experience in any type of software development product release can be beneficial.

Take a Moment Away From Your Project

In any type of intensive development project, it can be easy to lose sight of the bigger picture. Many developers find themselves working longer hours as the release of a product grows near, and this can contribute to stress, worry and doubt.

When possible, take some time to step away from your work for a bit. If you can put your project down for a few days to get your mind off of things, this will provide you with some time to relax and come back to your project with a fresh set of eyes and a clear mind.

Ask for a Review

You can also ask trusted friends and colleagues to review your work before release. This may not be a full-on bug hunt, but it can help you have confidence that the main parameters are working fine and that no glaring issues exist. You can also ask for general feedback, but be careful not to let the opinions of others sway you from your overall mission of developing a stellar product that fulfills your vision.

Read a similar article about Kubernetes dev environments here at this page.


Text

Exploring the Azure Technology Stack: A Solution Architect’s Journey

Kavin

As a solution architect, my career revolves around solving complex problems and designing systems that are scalable, secure, and efficient. The rise of cloud computing has transformed the way we think about technology, and Microsoft Azure has been at the forefront of this evolution. With its diverse and powerful technology stack, Azure offers endless possibilities for businesses and developers alike. My journey with Azure began with Microsoft Azure training online, which not only deepened my understanding of cloud concepts but also helped me unlock the potential of Azure’s ecosystem.

In this blog, I will share my experience working with a specific Azure technology stack that has proven to be transformative in various projects. This stack primarily focuses on serverless computing, container orchestration, DevOps integration, and globally distributed data management. Let’s dive into how these components come together to create robust solutions for modern business challenges.

Understanding the Azure Ecosystem

Azure’s ecosystem is vast, encompassing services that cater to infrastructure, application development, analytics, machine learning, and more. For this blog, I will focus on a specific stack that includes:

Azure Functions for serverless computing.

Azure Kubernetes Service (AKS) for container orchestration.

Azure DevOps for streamlined development and deployment.

Azure Cosmos DB for globally distributed, scalable data storage.

Each of these services has unique strengths, and when used together, they form a powerful foundation for building modern, cloud-native applications.

1. Azure Functions: Embracing Serverless Architecture

Serverless computing has redefined how we build and deploy applications. With Azure Functions, developers can focus on writing code without worrying about managing infrastructure. Azure Functions supports multiple programming languages and offers seamless integration with other Azure services.

Real-World Application

In one of my projects, we needed to process real-time data from IoT devices deployed across multiple locations. Azure Functions was the perfect choice for this task. By integrating Azure Functions with Azure Event Hubs, we were able to create an event-driven architecture that processed millions of events daily. The serverless nature of Azure Functions allowed us to scale dynamically based on workload, ensuring cost-efficiency and high performance.

Key Benefits:

Auto-scaling: Automatically adjusts to handle workload variations.

Cost-effective: Pay only for the resources consumed during function execution.

Integration-ready: Easily connects with services like Logic Apps, Event Grid, and API Management.

2. Azure Kubernetes Service (AKS): The Power of Containers

Containers have become the backbone of modern application development, and Azure Kubernetes Service (AKS) simplifies container orchestration. AKS provides a managed Kubernetes environment, making it easier to deploy, manage, and scale containerized applications.

Real-World Application

In a project for a healthcare client, we built a microservices architecture using AKS. Each service—such as patient records, appointment scheduling, and billing—was containerized and deployed on AKS. This approach provided several advantages:

Isolation: Each service operated independently, improving fault tolerance.

Scalability: AKS scaled specific services based on demand, optimizing resource usage.

Observability: Using Azure Monitor, we gained deep insights into application performance and quickly resolved issues.

The integration of AKS with Azure DevOps further streamlined our CI/CD pipelines, enabling rapid deployment and updates without downtime.
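The post does not say which autoscaling mechanism was used, so the following is only an assumption: on Kubernetes, per-service scaling is commonly handled by the Horizontal Pod Autoscaler, whose documented rule is `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, skipped when the ratio is within a small tolerance of 1. The sketch below applies that rule; the function name, replica limits, and sample numbers are hypothetical.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Apply the Horizontal Pod Autoscaler scaling rule to one metric."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas          # close enough to target: do nothing
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


# Hypothetical billing service: 4 pods at 90% CPU against a 60% target.
print(desired_replicas(4, 90, 60))
```

Because each service has its own replica count and target, a busy service can scale out while quieter ones stay small.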

Key Benefits:

Managed Kubernetes: Reduces operational overhead with automated updates and patching.

Multi-region support: Enables global application deployments.

Built-in security: Integrates with Azure Active Directory and offers role-based access control (RBAC).

3. Azure DevOps: Streamlining Development Workflows

Azure DevOps is an all-in-one platform for managing development workflows, from planning to deployment. It includes tools like Azure Repos, Azure Pipelines, and Azure Artifacts, which support collaboration and automation.

Real-World Application

For an e-commerce client, we used Azure DevOps to establish an efficient CI/CD pipeline. The project involved multiple teams working on front-end, back-end, and database components. Azure DevOps provided:

Version control: Using Azure Repos for centralized code management.

Automated pipelines: Azure Pipelines for building, testing, and deploying code.

Artifact management: Storing dependencies in Azure Artifacts for seamless integration.

The result? Deployment cycles that previously took weeks were reduced to just a few hours, enabling faster time-to-market and improved customer satisfaction.

Key Benefits:

End-to-end integration: Unifies tools for seamless development and deployment.

Scalability: Supports projects of all sizes, from startups to enterprises.

Collaboration: Facilitates team communication with built-in dashboards and tracking.

4. Azure Cosmos DB: Global Data at Scale

Azure Cosmos DB is a globally distributed, multi-model database service designed for mission-critical applications. It guarantees low latency, high availability, and scalability, making it ideal for applications requiring real-time data access across multiple regions.

Real-World Application

In a project for a financial services company, we used Azure Cosmos DB to manage transaction data across multiple continents. The database’s multi-region replication ensured data consistency and availability, even during regional outages. Additionally, Cosmos DB’s support for multiple APIs (SQL, MongoDB, Cassandra, etc.) allowed us to integrate seamlessly with existing systems.

Key Benefits:

Global distribution: Data is replicated across regions with minimal latency.

Flexibility: Supports various data models, including key-value, document, and graph.

SLAs: Offers industry-leading SLAs for availability, throughput, and latency.

Building a Cohesive Solution

Combining these Azure services creates a technology stack that is flexible, scalable, and efficient. Here’s how they work together in a hypothetical solution:

Data Ingestion: IoT devices send data to Azure Event Hubs.

Processing: Azure Functions processes the data in real-time.

Storage: Processed data is stored in Azure Cosmos DB for global access.

Application Logic: Containerized microservices run on AKS, providing APIs for accessing and manipulating data.

Deployment: Azure DevOps manages the CI/CD pipeline, ensuring seamless updates to the application.
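The data flow in the steps above can be sketched with in-memory stand-ins, which keeps the example runnable without any Azure account or SDK. Everything here is hypothetical: a `deque` plays the role of the event stream, `process` plays the role of the function, a dictionary plays the role of the database, and `latest` plays the role of an API served by a microservice. The event fields and the alert threshold are assumptions for illustration.

```python
from collections import defaultdict, deque


def process(event):
    """Processing step: validate an event and enrich it with an alert flag."""
    if "device_id" not in event or "temp_c" not in event:
        return None                                  # drop malformed events
    temp_c = float(event["temp_c"])
    return {"device_id": event["device_id"], "temp_c": temp_c, "alert": temp_c > 30.0}


def run_pipeline(raw_events):
    """Ingest events into a queue, process them, and store the results."""
    queue = deque(raw_events)                        # ingestion stand-in
    store = defaultdict(list)                        # storage stand-in
    while queue:
        record = process(queue.popleft())
        if record is not None:
            store[record["device_id"]].append(record)
    return store


def latest(store, device_id):
    """Application-logic stand-in: return the newest reading for a device."""
    readings = store.get(device_id)
    return readings[-1] if readings else None


store = run_pipeline([
    {"device_id": "sensor-1", "temp_c": 21.5},
    {"device_id": "sensor-1", "temp_c": 31.0},
    {"temp_c": 19.0},                                # malformed: no device_id
])
print(latest(store, "sensor-1"))
```

In the hosted version, each stand-in would be replaced by the corresponding managed service, while the shape of the flow stays the same.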

This architecture demonstrates how Azure’s technology stack can address modern business challenges while maintaining high performance and reliability.

Final Thoughts

My journey with Azure has been both rewarding and transformative. The training I received at ACTE Institute provided me with a strong foundation to explore Azure’s capabilities and apply them effectively in real-world scenarios. For those new to cloud computing, I recommend starting with a solid training program that offers hands-on experience and practical insights.

As the demand for cloud professionals continues to grow, specializing in Azure’s technology stack can open doors to exciting opportunities. If you’re based in Hyderabad or prefer online learning, consider enrolling in Microsoft Azure training in Hyderabad to kickstart your journey.

Azure’s ecosystem is continuously evolving, offering new tools and features to address emerging challenges. By staying committed to learning and experimenting, we can harness the full potential of this powerful platform and drive innovation in every project we undertake.

#cybersecurity#database#marketingstrategy#digitalmarketing#adtech#artificialintelligence#machinelearning#ai


Text

Top 10 In-Demand Tech Jobs in 2025

Technology is growing faster than ever, and so is the need for skilled professionals in the field. From artificial intelligence to cloud computing, businesses are looking for experts who can keep up with the latest advancements. These tech jobs not only pay well but also offer great career growth and exciting challenges.

In this blog, we’ll look at the top 10 tech jobs that are in high demand today. Whether you’re starting your career or thinking of learning new skills, these jobs can help you plan a bright future in the tech world.

1. AI and Machine Learning Specialists

Artificial Intelligence (AI) and Machine Learning are changing the game by helping machines learn and improve on their own without needing step-by-step instructions. They’re being used in many areas, like chatbots, spotting fraud, and predicting trends.

Key Skills: Python, TensorFlow, PyTorch, data analysis, deep learning, and natural language processing (NLP).

Industries Hiring: Healthcare, finance, retail, and manufacturing.

Career Tip: Keep up with AI and machine learning by working on projects and getting an AI certification. Joining AI hackathons helps you learn and meet others in the field.

2. Data Scientists

Data scientists work with large sets of data to find patterns, trends, and useful insights that help businesses make smart decisions. They play a key role in everything from personalized marketing to predicting health outcomes.

Key Skills: Data visualization, statistical analysis, R, Python, SQL, and data mining.

Industries Hiring: E-commerce, telecommunications, and pharmaceuticals.

Career Tip: Work with real-world data and build a strong portfolio to showcase your skills. Earning certifications in data science tools can help you stand out.

3. Cloud Computing Engineers

These professionals create and manage cloud systems that allow businesses to store data and run apps without needing physical servers, making operations more efficient.

Key Skills: AWS, Azure, Google Cloud Platform (GCP), DevOps, and containerization (Docker, Kubernetes).

Industries Hiring: IT services, startups, and enterprises undergoing digital transformation.

Career Tip: Get certified in cloud platforms like AWS (e.g., AWS Certified Solutions Architect).

4. Cybersecurity Experts

Cybersecurity professionals protect companies from data breaches, malware, and other online threats. As remote work grows, keeping digital information safe is more crucial than ever.

Key Skills: Ethical hacking, penetration testing, risk management, and cybersecurity tools.

Industries Hiring: Banking, IT, and government agencies.

Career Tip: Stay updated on new cybersecurity threats and trends. Certifications like CEH (Certified Ethical Hacker) or CISSP (Certified Information Systems Security Professional) can help you advance in your career.

5. Full-Stack Developers

Full-stack developers are skilled programmers who can work on both the front-end (what users see) and the back-end (server and database) of web applications.

Key Skills: JavaScript, React, Node.js, HTML/CSS, and APIs.

Industries Hiring: Tech startups, e-commerce, and digital media.

Career Tip: Create a strong GitHub profile with projects that highlight your full-stack skills. Learn popular frameworks like React Native to expand into mobile app development.

6. DevOps Engineers

DevOps engineers help make software faster and more reliable by connecting development and operations teams. They streamline the process for quicker deployments.

Key Skills: CI/CD pipelines, automation tools, scripting, and system administration.

Industries Hiring: SaaS companies, cloud service providers, and enterprise IT.

Career Tip: Learn key tools like Jenkins, Ansible, and Kubernetes, and develop scripting skills in languages like Bash or Python. Earning a DevOps certification is a plus and can enhance your expertise in the field.

7. Blockchain Developers

Blockchain developers build secure, transparent, and tamper-resistant systems. Blockchain is not just for cryptocurrencies; it’s also used in tracking supply chains, managing healthcare records, and even in voting systems.

Key Skills: Solidity, Ethereum, smart contracts, cryptography, and DApp development.

Industries Hiring: Fintech, logistics, and healthcare.

Career Tip: Create and share your own blockchain projects to show your skills. Joining blockchain communities can help you learn more and connect with others in the field.

8. Robotics Engineers

Robotics engineers design, build, and program robots to do tasks faster or safer than humans. Their work is especially important in industries like manufacturing and healthcare.

Key Skills: Programming (C++, Python), robotics process automation (RPA), and mechanical engineering.

Industries Hiring: Automotive, healthcare, and logistics.

Career Tip: Stay updated on new trends like self-driving cars and AI in robotics.

9. Internet of Things (IoT) Specialists

IoT specialists work on systems that connect devices to the internet, allowing them to communicate and be controlled easily. This is crucial for creating smart cities, homes, and industries.

Key Skills: Embedded systems, wireless communication protocols, data analytics, and IoT platforms.

Industries Hiring: Consumer electronics, automotive, and smart city projects.

Career Tip: Create IoT prototypes and learn to use platforms like AWS IoT or Microsoft Azure IoT. Stay updated on 5G technology and edge computing trends.

10. Product Managers

Product managers oversee the development of products, from idea to launch, making sure they are both technically possible and meet market demands. They connect technical teams with business stakeholders.

Key Skills: Agile methodologies, market research, UX design, and project management.

Industries Hiring: Software development, e-commerce, and SaaS companies.

Career Tip: Work on improving your communication and leadership skills. Getting certifications like PMP (Project Management Professional) or CSPO (Certified Scrum Product Owner) can help you advance.

Importance of Upskilling in the Tech Industry

Stay Up-to-Date: Technology changes fast, and learning new skills helps you keep up with the latest trends and tools.

Grow in Your Career: By learning new skills, you open doors to better job opportunities and promotions.

Earn a Higher Salary: The more skills you have, the more valuable you are to employers, which can lead to higher-paying jobs.

Feel More Confident: Learning new things makes you feel more prepared and ready to take on tougher tasks.

Adapt to Changes: Technology keeps evolving, and upskilling helps you stay flexible and ready for any new changes in the industry.

Top Companies Hiring for These Roles

Global Tech Giants: Google, Microsoft, Amazon, and IBM.

Startups: Fintech, health tech, and AI-based startups are often at the forefront of innovation.

Consulting Firms: Companies like Accenture, Deloitte, and PwC increasingly seek tech talent.

In conclusion, the tech world is constantly changing, and staying updated is key to having a successful career. In 2025, jobs in fields like AI, cybersecurity, data science, and software development will be in high demand. By learning the right skills and keeping up with new trends, you can prepare yourself for these exciting roles. Whether you're just starting or looking to improve your skills, the tech industry offers many opportunities for growth and success.

#Top 10 Tech Jobs in 2025#In- Demand Tech Jobs#High paying Tech Jobs#artificial intelligence#datascience#cybersecurity


Text

Cloud Agnostic: Achieving Flexibility and Independence in Cloud Management

As businesses increasingly migrate to the cloud, they face a critical decision: which cloud provider to choose? While AWS, Microsoft Azure, and Google Cloud offer powerful platforms, the concept of "cloud agnostic" is gaining traction. Cloud agnosticism refers to a strategy where businesses avoid vendor lock-in by designing applications and infrastructure that work across multiple cloud providers. This approach provides flexibility, independence, and resilience, allowing organizations to adapt to changing needs and avoid reliance on a single provider.

What Does It Mean to Be Cloud Agnostic?

Being cloud agnostic means creating and managing systems, applications, and services that can run on any cloud platform. Instead of committing to a single cloud provider, businesses design their architecture to function seamlessly across multiple platforms. This flexibility is achieved by using open standards, containerization technologies like Docker, and orchestration tools such as Kubernetes.

Key features of a cloud agnostic approach include:

Interoperability: Applications must be able to operate across different cloud environments.

Portability: The ability to migrate workloads between different providers without significant reconfiguration.

Standardization: Using common frameworks, APIs, and languages that work universally across platforms.

Benefits of Cloud Agnostic Strategies

Avoiding Vendor Lock-In: The primary benefit of being cloud agnostic is avoiding vendor lock-in. Once a business builds its entire infrastructure around a single cloud provider, it can be challenging to switch or expand to other platforms. This could lead to increased costs and limited innovation. With a cloud agnostic strategy, businesses can choose the best services from multiple providers, optimizing both performance and costs.

Cost Optimization: Cloud agnosticism allows companies to choose the most cost-effective solutions across providers. As cloud pricing models are complex and vary by region and usage, a cloud agnostic system enables businesses to leverage competitive pricing and minimize expenses by shifting workloads to different providers when necessary.

Greater Resilience and Uptime: By operating across multiple cloud platforms, organizations reduce the risk of downtime. If one provider experiences an outage, the business can shift workloads to another platform, ensuring continuous service availability. This redundancy builds resilience, ensuring high availability in critical systems.

Flexibility and Scalability: A cloud agnostic approach gives companies the freedom to adjust resources based on current business needs. This means scaling applications horizontally or vertically across different providers without being restricted by the limits or offerings of a single cloud vendor.

Global Reach: Different cloud providers have varying levels of presence across geographic regions. With a cloud agnostic approach, businesses can leverage the strengths of various providers in different areas, ensuring better latency, performance, and compliance with local regulations.
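The cost and resilience points above come down to a simple placement rule: run the workload on the cheapest provider that is currently healthy. Here is a minimal sketch of that rule. The provider names, prices, and health flags are hypothetical and do not come from any real price list.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Provider:
    name: str            # hypothetical label, not a real account
    hourly_price: float  # illustrative price per instance-hour
    healthy: bool        # result of some external health check


def pick_provider(providers):
    """Return the cheapest healthy provider, or None if all are down."""
    candidates = [p for p in providers if p.healthy]
    if not candidates:
        return None
    return min(candidates, key=lambda p: p.hourly_price)


providers = [
    Provider("cloud-a", 0.12, healthy=True),
    Provider("cloud-b", 0.10, healthy=False),  # cheapest, but in an outage
    Provider("cloud-c", 0.15, healthy=True),
]

choice = pick_provider(providers)
print(choice.name if choice else "no provider available")
```

In practice the health flag and prices would be fed by monitoring and billing data, and moving a workload is rarely free, so a real policy would also weigh migration cost.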

Challenges of Cloud Agnosticism

Despite the advantages, adopting a cloud agnostic approach comes with its own set of challenges:

Increased Complexity: Managing and orchestrating services across multiple cloud providers is more complex than relying on a single vendor. Businesses need robust management tools, monitoring systems, and teams with expertise in multiple cloud environments to ensure smooth operations.

Higher Initial Costs: The upfront costs of designing a cloud agnostic architecture can be higher than those of a single-provider system. Developing portable applications and investing in technologies like Kubernetes or Terraform requires significant time and resources.

Limited Use of Provider-Specific Services: Cloud providers often offer unique, advanced services—such as machine learning tools, proprietary databases, and analytics platforms—that may not be easily portable to other clouds. Being cloud agnostic could mean missing out on some of these specialized services, which may limit innovation in certain areas.

Tools and Technologies for Cloud Agnostic Strategies

Several tools and technologies make cloud agnosticism more accessible for businesses:

Containerization: Docker and similar containerization tools allow businesses to encapsulate applications in lightweight, portable containers that run consistently across various environments.

Orchestration: Kubernetes is a leading tool for orchestrating containers across multiple cloud platforms. It ensures scalability, load balancing, and failover capabilities, regardless of the underlying cloud infrastructure.

Infrastructure as Code (IaC): Tools like Terraform and Ansible enable businesses to define cloud infrastructure using code. This makes it easier to manage, replicate, and migrate infrastructure across different providers.

APIs and Abstraction Layers: Using APIs and abstraction layers helps standardize interactions between applications and different cloud platforms, enabling smooth interoperability.
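The abstraction-layer idea can be sketched in a few lines. In this minimal sketch the interface `BlobStore`, the stand-in backend `InMemoryStore`, and the helper `archive_report` are all hypothetical names. A real backend would wrap a provider SDK behind the same two methods.

```python
from typing import Dict, Protocol


class BlobStore(Protocol):
    """Hypothetical provider-neutral interface for object storage."""

    def put(self, key: str, data: bytes) -> None: ...

    def get(self, key: str) -> bytes: ...


class InMemoryStore:
    """Stand-in backend; a real one would wrap a provider SDK."""

    def __init__(self, provider: str) -> None:
        self.provider = provider
        self._objects: Dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> None:
        self._objects[key] = data

    def get(self, key: str) -> bytes:
        return self._objects[key]


def archive_report(store: BlobStore, report_id: str, body: str) -> str:
    """Application code depends only on BlobStore, never on a vendor SDK."""
    key = f"reports/{report_id}.txt"
    store.put(key, body.encode("utf-8"))
    return key


# Swapping providers means swapping the backend object, not the caller.
for backend in (InMemoryStore("cloud-a"), InMemoryStore("cloud-b")):
    key = archive_report(backend, "2024-q1", "quarterly totals")
    print(backend.provider, key, backend.get(key).decode("utf-8"))
```

The trade-off is the one named under the challenges above: the shared interface can only expose what every provider supports.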

When Should You Consider a Cloud Agnostic Approach?

A cloud agnostic approach is not always necessary for every business. Here are a few scenarios where adopting cloud agnosticism makes sense:

Businesses operating in regulated industries that need to maintain compliance across multiple regions.

Companies that require high availability and fault tolerance across different cloud platforms for mission-critical applications.

Organizations with global operations that need to optimize performance and cost across multiple cloud regions.

Businesses that aim to avoid long-term vendor lock-in and maintain flexibility for future growth and scaling needs.

Conclusion

Adopting a cloud agnostic strategy offers businesses unparalleled flexibility, independence, and resilience in cloud management. While the approach comes with challenges such as increased complexity and higher upfront costs, the long-term benefits of avoiding vendor lock-in, optimizing costs, and enhancing scalability are significant. By leveraging the right tools and technologies, businesses can achieve a truly cloud-agnostic architecture that supports innovation and growth in a competitive landscape.

Embrace the cloud agnostic approach to future-proof your business operations and stay ahead in the ever-evolving digital world.


Is cPanel on Its Deathbed? A Tale of Technology, Profits, and a Slow-Moving Train Wreck

Ah, cPanel. The go-to control panel for many web hosting services since the dawn of, well, web hosting. Once the epitome of innovation, it’s now akin to a grizzled war veteran, limping along with a cane and wearing an “I Survived Y2K” t-shirt. So what went wrong? Let’s dive into this slow-moving technological telenovela, rife with corporate greed, security loopholes, and a legacy that may be hanging by a thread.

Chapter 1: A Brief, Glorious History (Or How cPanel Shot to Stardom)

Once upon a time, cPanel was the bee’s knees. Launched in 1996, this software was, for a while, the pinnacle of web management systems. It promised simplicity, reliability, and functionality. Oh, the golden years!

Chapter 2: The Tech Stack Tortoise

In the fast-paced world of technology, being stagnant is synonymous with being extinct. While newer tech stacks are integrating AI, machine learning, and all sorts of jazzy things, cPanel seems to be stuck in a time warp. Why? Because the tech stack is more outdated than a pair of bell-bottom trousers. No Docker, no Kubernetes, and don’t even get me started on the lack of robust API support.

Chapter 3: “The Corpulent Corporate”

In 2018, Oakley Capital, a private equity firm, acquired cPanel. For many, this was the beginning of the end. Pricing structures were jumbled, turning into a monetisation extravaganza. It’s like turning your grandma’s humble pie shop into a mass production line for rubbery, soulless pies. They’ve squeezed every ounce of profit from it, often at the expense of the end-users and smaller hosting companies.

Chapter 4: Security—or the Lack Thereof

Ah, the elephant in the room. cPanel has had its fair share of vulnerabilities. Whether it’s SQL injection flaws, privilege escalation, or simple, plain-text passwords (yes, you heard right), cPanel often appears in the headlines for all the wrong reasons. It’s like that dodgy uncle at family reunions who always manages to spill wine on the carpet; you know he’s going to mess up, yet somehow he’s always invited.

Chapter 5: The (Dis)loyal Subjects—The Hosting Companies

Remember those hosting companies that once swore by cPanel? Well, let’s just say some of them have been seen flirting with competitors at the bar. Newer, shinier control panels are coming to market, offering modern tech stacks and, gasp, lower prices! It’s like watching cPanel’s loyal subjects slowly turn their backs, one by one.

Chapter 6: The Alternatives—Not Just a Rebellion, but a Revolution

Plesk, Webmin, DirectAdmin, oh my! New players are rising, offering updated tech stacks, more customizable APIs, and—wait for it—better security protocols. They’re the Han Solos to cPanel’s Jabba the Hutt: faster, sleeker, and without the constant drooling.

Conclusion: The Twilight Years or a Second Wind?

The debate rages on. Is cPanel merely an ageing actor waiting for its swan song, or can it adapt and evolve, perhaps surprising us all? Either way, the story of cPanel serves as a cautionary tale: adapt or die. And for heaven’s sake, update your tech stack before it becomes a relic in a technology museum, right between floppy disks and dial-up modems.

This outline only scratches the surface, but it’s a start. If cPanel wants to avoid becoming the Betamax of web management systems, it better start evolving—stat. Cheers!

#hosting#wordpress#cpanel#webdesign#servers#websites#webdeveloper#technology#tech#website#developer#digitalagency#uk#ukdeals#ukbusiness#smallbussinessowner


Elevating Your Full-Stack Developer Expertise: Exploring Emerging Skills and Technologies

Introduction: In the dynamic landscape of web development, staying at the forefront requires continuous learning and adaptation. Full-stack developers play a pivotal role in crafting modern web applications, balancing frontend finesse with backend robustness. This guide delves into the evolving skills and technologies that can propel full-stack developers to new heights of expertise and innovation.

Pioneering Progress: Key Skills for Full-Stack Developers

1. Innovating with Microservices Architecture:

Microservices have redefined application development, offering scalability and flexibility in the face of complexity. Mastery of tools like Docker (for containers) and Kubernetes (for orchestration) empowers developers to architect, deploy, and manage microservices efficiently. By breaking down monolithic applications into modular components, developers can iterate rapidly and respond to changing requirements with agility.

2. Embracing Serverless Computing:

The advent of serverless architecture has revolutionized infrastructure management, freeing developers from the burdens of server maintenance. Platforms such as AWS Lambda and Azure Functions enable developers to focus solely on code development, driving efficiency and cost-effectiveness. Embrace serverless computing to build scalable, event-driven applications that adapt seamlessly to fluctuating workloads.

3. Crafting Progressive Web Apps (PWAs):

Progressive Web Apps (PWAs) herald a new era of web development, delivering native app-like experiences within the browser. Harness the power of technologies like Service Workers and Web App Manifests to create PWAs that are fast, reliable, and engaging. With features like offline functionality and push notifications, PWAs blur the lines between web and mobile, captivating users and enhancing engagement.

4. Harnessing GraphQL for Flexible Data Management:

GraphQL has emerged as a versatile alternative to RESTful APIs, offering a unified interface for data fetching and manipulation. Dive into GraphQL's intuitive query language and schema-driven approach to simplify data interactions and optimize performance. With GraphQL, developers can fetch precisely the data they need, minimizing overhead and maximizing efficiency.
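The core idea, that the client names the fields it wants and gets back only those, can be illustrated without any GraphQL library. The sketch below is not a GraphQL implementation. `select_fields` and the sample record are hypothetical, and a real server would parse a query document against a schema instead of taking a nested dict.

```python
def select_fields(record, selection):
    """Return only the fields named in `selection`.

    `selection` maps a field name to True (take the value as-is) or to a
    nested selection dict (recurse into a sub-object).
    """
    result = {}
    for field, sub in selection.items():
        value = record[field]
        result[field] = value if sub is True else select_fields(value, sub)
    return result


# Full record as a backend might hold it (sample data, made up).
user = {
    "id": 7,
    "name": "Ada",
    "email": "ada@example.com",
    "address": {"city": "London", "postcode": "N1", "country": "UK"},
}

# The client asks for the name and the city only, much like the query
# `{ user { name address { city } } }` would.
wanted = {"name": True, "address": {"city": True}}
print(select_fields(user, wanted))
```

The response carries two values instead of the whole record, which is the saving in overhead that the paragraph above describes.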

5. Unlocking Potential with Jamstack Development:

Jamstack architecture empowers developers to build fast, secure, and scalable web applications using modern tools and practices. Explore frameworks like Gatsby and Next.js to leverage pre-rendering, serverless functions, and CDN caching. By decoupling frontend presentation from backend logic, Jamstack enables developers to deliver blazing-fast experiences that delight users and drive engagement.

6. Integrating Headless CMS for Content Flexibility:

Headless CMS platforms offer developers unprecedented control over content management, enabling seamless integration with frontend frameworks. Explore platforms like Contentful and Strapi to decouple content creation from presentation, facilitating dynamic and personalized experiences across channels. With headless CMS, developers can iterate quickly and deliver content-driven applications with ease.

7. Optimizing Single Page Applications (SPAs) for Performance:

Single Page Applications (SPAs) provide immersive user experiences but require careful optimization to ensure performance and responsiveness. Implement techniques like lazy loading and server-side rendering to minimize load times and enhance interactivity. By optimizing resource delivery and prioritizing critical content, developers can create SPAs that deliver a seamless and engaging user experience.

8. Infusing Intelligence with Machine Learning and AI:

Machine learning and artificial intelligence open new frontiers for full-stack developers, enabling intelligent features and personalized experiences. Dive into frameworks like TensorFlow.js in the browser or PyTorch on the backend to build recommendation systems, predictive analytics, and natural language processing capabilities. By harnessing the power of machine learning, developers can create smarter, more adaptive applications that anticipate user needs and preferences.

9. Safeguarding Applications with Cybersecurity Best Practices:

As cyber threats continue to evolve, cybersecurity remains a critical concern for developers and organizations alike. Stay informed about common vulnerabilities and adhere to best practices for securing applications and user data. By implementing robust security measures and proactive monitoring, developers can protect against potential threats and safeguard the integrity of their applications.

10. Streamlining Development with CI/CD Pipelines:

Continuous Integration and Deployment (CI/CD) pipelines are essential for accelerating development workflows and ensuring code quality and reliability. Explore tools like Jenkins, CircleCI, and GitLab CI/CD to automate testing, integration, and deployment processes. By embracing CI/CD best practices, developers can deliver updates and features with confidence, driving innovation and agility in their development cycles.

#full stack developer#education#information#full stack web development#front end development#web development#frameworks#technology#backend#full stack developer course


How DNS-Based Endpoints Enhance Security in GKE Clusters

DNS-Based Endpoints

If you use Google Kubernetes Engine (GKE), you know how important it is to restrict access to the cluster control plane, which handles Kubernetes API calls, so that unwanted access is blocked while you can still manage the cluster.

Until now, GKE offered two main ways to secure the control plane: authorized networks and disabling the public endpoint. Both can make the cluster harder to reach. Access through the cluster’s private network calls for workarounds such as bastion hosts, and the list of authorized networks has to be kept up to date for every cluster.

Google Cloud is presenting a new DNS-based endpoint for GKE clusters today, which offers more security restrictions and access method flexibility. All clusters have the DNS-based endpoint available today, irrespective of cluster configuration or version. Several of the present issues with Kubernetes control plane access are resolved with the new DNS-based endpoint, including:

Complex allowlist and firewall setups based on IP: ACLs and approved network configurations based on IP addresses are vulnerable to human setup error.

IP-based static configurations: You must adjust the approved network IP firewall configuration in accordance with changes in network configuration and IP ranges.

Proxy/bastion hosts: You must set up a proxy or bastion host if you are accessing the GKE control plane from a different cloud location, a distant network, or a VPC that is not the same as the VPC where the cluster is located.

Because of these difficulties, GKE customers have had to deal with a complicated configuration and a confusing user experience.

Introducing a new DNS-based endpoint

With the new DNS-based endpoint for GKE, each cluster control plane has its own DNS name, or fully qualified domain name (FQDN). That name resolves to a frontend that is reachable from any network that can connect to Google Cloud APIs, such as VPC networks, on-premises networks, or other cloud networks. The frontend applies security policies to block unwanted traffic and then routes the remaining traffic to your cluster.

This strategy has several advantages:

Simple flexible access from anywhere

Proxy nodes and bastion hosts are not required when using the DNS-based endpoint. Without using proxies, authorized users can access your control plane from various clouds, on-premises deployments, or from their homes. Transiting various VPCs is unrestricted with DNS-based endpoints because all that is needed is access to Google APIs. You can still use VPC Service Controls to restrict access to particular networks if you’d like.

Dynamic Security

The same IAM controls that safeguard all GCP API access are also utilized to protect access to your control plane over the DNS-based endpoint. You can make sure that only authorized users, regardless of the IP address or network they use, may access the control plane by implementing identity and access management (IAM) policies. You can easily remove access to a specific identity if necessary, without having to bother about network IP address bounds and configuration. IAM roles can be tailored to the requirements of your company.

See Customize your network isolation for additional information on the precise permissions needed to set up IAM roles, rules, and authentication tokens.

Two layers of security

In addition to IAM policies, you can set up network-based controls with VPC Service Controls, giving your cluster control plane a multi-layer security model. VPC Service Controls adds context-aware access controls based on attributes such as network origin. This lets you match the security of a private cluster that is reachable only from a VPC network.

VPC Service Controls is used by all Google Cloud APIs, so the security configuration of your clusters lines up with that of the services and data hosted behind every other Google Cloud API. You can give strong guarantees against unauthorized access to data and services for all Google Cloud resources used in a project. VPC Service Controls also works with Cloud Audit Logs to track control plane access.

How to configure DNS-based access

Setting up DNS-based access to the GKE cluster control plane is simple. Follow the steps below.

Enable the DNS-based endpoint

Use the following command to enable DNS-based access for a new cluster:

$ gcloud container clusters create $cluster_name --enable-dns-access

As an alternative, use the following command to allow DNS-based access for an existing cluster:

$ gcloud container clusters update $cluster_name --enable-dns-access

Configure IAM

To reach the control plane, requests must be authenticated with a role that includes the new IAM permission container.clusters.connect. The following roles include it:

roles/container.developer

roles/container.viewer

Ensure your client can access Google APIs

If your client connects from a Google Cloud VPC, confirm that it can reach Google APIs. One way to do this is to enable Private Google Access, which lets clients connect to Google APIs without going over the public internet. Private Google Access is configured per subnet.

Tip: Private Google Access is already enabled for node subnetworks.

[Optional] Setting up access to Google APIs via Private Service Connect

The Private Service Connect for Google APIs endpoint, which is used to access the other Google APIs, can be used to access the DNS endpoint of the cluster. To configure Private Service Connect for Google APIs endpoints, follow the instructions on the Access Google APIs through endpoints page.

Accessing the cluster’s DNS endpoint through a custom endpoint is not supported, as detailed in the “use an endpoint” section. To make it work, you must create a CNAME record to “gke.goog” and an A record that maps “gke.goog” to the private IP allocated to Private Service Connect for Google APIs.

Try DNS access

You can now try DNS-based access. The following command generates a kubeconfig file using the cluster’s DNS address:

$ gcloud container clusters get-credentials $cluster_name --dns-endpoint

Use kubectl to access your cluster. This also lets Cloud Shell reach clusters that have no public IP endpoint, which previously required a proxy.

Extra security using VPC Service Controls

Additional control plane access security can be added with VPC Service Controls.

What about the IP-based endpoint?

You can try DNS-based control plane access without affecting your existing clients by keeping the IP-based endpoint enabled. Once you’re satisfied with DNS-based access, disable IP-based access for added security and easier cluster management:

$ gcloud container clusters update $cluster_name --enable-ip-access=false
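For scripting, the rollout above can be collected into one ordered list of commands. This is a sketch only: the helper name and the cluster name are hypothetical, and the flags are the ones quoted in this post. A real invocation usually also needs a project and a location flag, which are left out because the post does not show them.

```python
import shlex


def dns_endpoint_rollout(cluster_name):
    """Return the gcloud commands from this post, in order, as argv lists.

    Nothing is executed here; pass an entry to subprocess.run() to run it.
    """
    base = ["gcloud", "container", "clusters"]
    return [
        # 1. Turn on the DNS-based endpoint for an existing cluster.
        base + ["update", cluster_name, "--enable-dns-access"],
        # 2. Write a kubeconfig entry that points at the DNS endpoint.
        base + ["get-credentials", cluster_name, "--dns-endpoint"],
        # 3. Once DNS access is verified, switch off the IP-based endpoint.
        base + ["update", cluster_name, "--enable-ip-access=false"],
    ]


for argv in dns_endpoint_rollout("demo-cluster"):  # hypothetical cluster name
    print(shlex.join(argv))
```

Keeping step 3 separate from step 1 mirrors the advice above: leave the IP-based endpoint on until DNS-based access has been verified.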

Read more on Govindhtech.com

#DNS#Security#GKE#GKEClusters#Kubernetes#API#DNSbased#VPCnetworks#GoogleAPIs#News#Technews#Technology#Technologynews#Technologytrends#Govindhtech


Introduction to Kubernetes Gateway API

http://securitytc.com/T0yml4


What Web Development Companies Do Differently for Fintech Clients

In the world of financial technology (fintech), innovation moves fast—but so do regulations, user expectations, and cyber threats. Building a fintech platform isn’t like building a regular business website. It requires a deeper understanding of compliance, performance, security, and user trust.

A professional Web Development Company that works with fintech clients follows a very different approach—tailoring everything from architecture to front-end design to meet the demands of the financial sector. So, what exactly do these companies do differently when working with fintech businesses?

Let’s break it down.

1. They Prioritize Security at Every Layer

Fintech platforms handle sensitive financial data—bank account details, personal identification, transaction histories, and more. A single breach can lead to massive financial and reputational damage.

That’s why development companies implement robust, multi-layered security from the ground up:

End-to-end encryption (both in transit and at rest)

Secure authentication (MFA, biometrics, or SSO)

Role-based access control (RBAC)

Real-time intrusion detection systems

Regular security audits and penetration testing

Security isn’t an afterthought—it’s embedded into every decision from architecture to deployment.
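As an illustration of role-based access control from the list above, here is a minimal deny-by-default check. The roles and permission strings are hypothetical. A production system would load them from a policy store and enforce them on the server for every request.

```python
# Hypothetical roles and permissions for a payments dashboard.
ROLE_PERMISSIONS = {
    "viewer": {"transactions:read"},
    "support": {"transactions:read", "refunds:create"},
    "admin": {"transactions:read", "refunds:create", "users:manage"},
}


def is_allowed(roles, permission):
    """True if any of the user's roles grants `permission`.

    Unknown roles grant nothing, so access is denied by default.
    """
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in roles)


print(is_allowed(["viewer"], "refunds:create"))             # viewer cannot refund
print(is_allowed(["viewer", "support"], "refunds:create"))  # support role grants it
print(is_allowed(["intern"], "transactions:read"))          # unknown role: denied
```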

2. They Build for Compliance and Regulation

Fintech companies must comply with strict regulatory frameworks like:

PCI-DSS for handling payment data

GDPR and CCPA for user data privacy

KYC/AML requirements for financial onboarding

SOX, SOC 2, and more for enterprise-level platforms

Development teams work closely with compliance officers to ensure:

Data retention and consent mechanisms are implemented

Audit logs are stored securely and access-controlled

Reporting tools are available to meet regulatory checks

APIs and third-party tools also meet compliance standards

This legal alignment ensures the platform is launch-ready—not legally exposed.

3. They Design with User Trust in Mind

For fintech apps, user trust is everything. If your interface feels unsafe or confusing, users won’t even enter their phone number—let alone their banking details.

Fintech-focused development teams create clean, intuitive interfaces that:

Highlight transparency (e.g., fees, transaction histories)

Minimize cognitive load during onboarding

Offer instant confirmations and reassuring microinteractions

Use verified badges, secure design patterns, and trust signals

Every interaction is designed to build confidence and reduce friction.

4. They Optimize for Real-Time Performance

Fintech platforms often deal with real-time transactions—stock trading, payments, lending, crypto exchanges, etc. Slow performance or downtime isn’t just frustrating; it can cost users real money.

Agencies build highly responsive systems by:

Using event-driven architectures with real-time data flows

Integrating WebSockets for live updates (e.g., price changes)

Scaling via cloud-native infrastructure like AWS Lambda or Kubernetes

Leveraging CDNs and edge computing for global delivery

Performance is monitored continuously to ensure sub-second response times—even under load.

5. They Integrate Secure, Scalable APIs

APIs are the backbone of fintech platforms—from payment gateways to credit scoring services, loan underwriting, KYC checks, and more.

Web development companies build secure, scalable API layers that:

Authenticate via OAuth2 or JWT

Throttle requests to prevent abuse

Log every call for auditing and debugging

Easily plug into services like Plaid, Razorpay, Stripe, or banking APIs

They also document everything clearly for internal use or third-party developers who may build on top of your platform.
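Request throttling, mentioned in the list above, is often done with a token bucket. The following is a minimal single-process sketch with made-up rate and capacity values. A real API layer would usually keep the counters in a shared store and track one bucket per client or API key.

```python
import time


class TokenBucket:
    """Allow bursts up to `capacity`, refilled at `rate` tokens per second."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock  # injectable so tests need not sleep
        self.tokens = float(capacity)
        self.updated = clock()

    def allow(self):
        """Consume one token if available; return whether the call may proceed."""
        now = self.clock()
        elapsed = now - self.updated
        self.updated = now
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


# Demo with a fake clock: 2 requests per second, bursts of up to 3.
fake_now = [0.0]
bucket = TokenBucket(rate=2, capacity=3, clock=lambda: fake_now[0])

print([bucket.allow() for _ in range(4)])  # burst of 3 passes, the 4th is throttled
fake_now[0] += 0.5                         # half a second later one token is back
print(bucket.allow())
```

A rejected call would typically be answered with HTTP 429 so that well-behaved clients back off.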

6. They Embrace Modular, Scalable Architecture

Fintech platforms evolve fast. New features—loan calculators, financial dashboards, user wallets—need to be rolled out frequently without breaking the system.

That’s why agencies use modular architecture principles:

Microservices for independent functionality

Scalable front-end frameworks (React, Angular)

Database sharding for performance at scale

Containerization (e.g., Docker) for easy deployment

This allows features to be developed, tested, and launched independently, enabling faster iteration and innovation.

7. They Build for Cross-Platform Access

Fintech users interact through mobile apps, web portals, embedded widgets, and sometimes even smartwatches. Development companies ensure consistent experiences across all platforms.

They use:

Responsive design with mobile-first approaches

Progressive Web Apps (PWAs) for fast, installable web portals

API-first design for reuse across multiple front-ends

Accessibility features (WCAG compliance) to serve all user groups

Cross-platform readiness expands your market and supports omnichannel experiences.

Conclusion

Fintech development is not just about great design or clean code—it’s about precision, trust, compliance, and performance. From data encryption and real-time APIs to regulatory compliance and user-centric UI, the stakes are much higher than in a standard website build.

That’s why working with a Web Development Company that understands the unique challenges of the financial sector is essential. With the right partner, you get more than a website—you get a secure, scalable, and regulation-ready platform built for real growth in a high-stakes industry.


Migrating Virtual Machines to Red Hat OpenShift Virtualization with Ansible Automation Platform

As enterprises modernize their infrastructure, migrating traditional virtual machines (VMs) to container-native platforms is no longer just a trend — it’s a necessity. One of the most powerful solutions for this evolution is Red Hat OpenShift Virtualization, which allows organizations to run VMs side-by-side with containers on a unified Kubernetes platform. When combined with Red Hat Ansible Automation Platform, this migration can be automated, repeatable, and efficient.

In this blog, we’ll explore how enterprises can leverage Ansible to seamlessly migrate workloads from legacy virtualization platforms (like VMware or KVM) to OpenShift Virtualization.

🔍 Why OpenShift Virtualization?

OpenShift Virtualization extends OpenShift’s capabilities to include traditional VMs, enabling:

Unified management of containers and VMs

Native integration with Kubernetes networking and storage

Simplified CI/CD pipelines that include VM-based workloads

Reduction of operational overhead and licensing costs

🛠️ The Role of Ansible Automation Platform

Red Hat Ansible Automation Platform is the glue that binds infrastructure automation, offering:

Agentless automation using SSH or APIs

Pre-built collections for platforms like VMware, OpenShift, KubeVirt, and more

Scalable execution environments for large-scale VM migration

Role-based access and governance through automation controller (formerly Tower)

🧭 Migration Workflow Overview

A typical migration flow using Ansible and OpenShift Virtualization involves:

1. Discovery Phase

Inventory the source VMs using Ansible VMware/KVM modules.

Collect VM configuration, network settings, and storage details.

2. Template Creation

Convert the discovered VM configurations into KubeVirt VirtualMachine manifests.

Define OpenShift-native templates to match the workload requirements.

3. Image Conversion and Upload

Use tools like virt-v2v or Ansible roles to export VM disk images (VMDK/QCOW2).

Upload to OpenShift using Containerized Data Importer (CDI) or PVCs.

4. VM Deployment

Deploy converted VMs as KubeVirt VirtualMachines via Ansible Playbooks.

Integrate with OpenShift Networking and Storage (Multus, OCS, etc.)

5. Validation & Post-Migration

Run automated smoke tests or app-specific validation.

Integrate monitoring and alerting via Prometheus/Grafana.

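- name: Deploy VM on OpenShift Virtualization
  hosts: localhost
  tasks:
    # The PVC backs the imported VM disk and must exist before the VM.
    - name: Create PVC for VM disk
      kubernetes.core.k8s:
        state: present
        definition: "{{ lookup('file', 'vm-pvc.yaml') | from_yaml }}"

    # Create the KubeVirt VirtualMachine object from its manifest.
    - name: Deploy VirtualMachine
      kubernetes.core.k8s:
        state: present
        definition: "{{ lookup('file', 'vm-definition.yaml') | from_yaml }}"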
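The playbook above reads the VM definition from a file that the post does not show. The sketch below generates a minimal KubeVirt VirtualMachine manifest that boots from an existing PVC. The VM name, PVC name, and sizing are hypothetical, and a real migration would carry over the network, CPU, and disk settings gathered in the discovery phase.

```python
import json


def virtual_machine_manifest(name, pvc_name, memory="2Gi", cores=1):
    """Build a minimal KubeVirt VirtualMachine that boots from an existing PVC."""
    return {
        "apiVersion": "kubevirt.io/v1",
        "kind": "VirtualMachine",
        "metadata": {"name": name},
        "spec": {
            "runStrategy": "Always",  # keep the VM running once it is created
            "template": {
                "spec": {
                    "domain": {
                        "cpu": {"cores": cores},
                        "resources": {"requests": {"memory": memory}},
                        "devices": {
                            "disks": [
                                {"name": "rootdisk", "disk": {"bus": "virtio"}}
                            ]
                        },
                    },
                    # The volume name must match the disk name above.
                    "volumes": [
                        {
                            "name": "rootdisk",
                            "persistentVolumeClaim": {"claimName": pvc_name},
                        }
                    ],
                }
            },
        },
    }


# Hypothetical VM and PVC names, for illustration only.
manifest = virtual_machine_manifest("legacy-app-vm", "legacy-app-disk")
print(json.dumps(manifest, indent=2))
```

JSON is a subset of YAML, so the printed output could be saved under the file name the playbook looks up, assuming the PVC it references has already been created.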

🔐 Benefits of This Approach

✅ Consistency – Every VM migration follows the same process.

✅ Auditability – Track every step of the migration with Ansible logs.

✅ Security – Ansible integrates with enterprise IAM and RBAC policies.

✅ Scalability – Migrate tens or hundreds of VMs using automation workflows.

🌐 Real-World Use Case

At HawkStack Technologies, we’ve successfully helped enterprises migrate large-scale critical workloads from VMware vSphere to OpenShift Virtualization using Ansible. Our structured playbooks, coupled with Red Hat-supported tools, ensured zero data loss and minimal downtime.

🔚 Conclusion

As cloud-native adoption grows, merging the worlds of VMs and containers is no longer optional. With Red Hat OpenShift Virtualization and Ansible Automation Platform, organizations get the best of both worlds — a powerful, policy-driven, scalable infrastructure that supports modern and legacy workloads alike.

If you're planning a VM migration journey or modernizing your data center, reach out to HawkStack Technologies — Red Hat Certified Partners — to accelerate your transformation. For more details, visit www.hawkstack.com
